How AR can support AI adoption in aircraft factories


Introduction

This article focuses on applying Machine Learning (ML) to decisions based on visual information. A primary application of this in manufacturing is visual inspection. Finding defects, classifying them, and validating correctness after repair are typical examples of what quality managers could use ML for.

This is much easier said than done, because a legacy business starting AI adoption from scratch often doesn't know where to look first. Challenges include what to analyze (use case), how to collect the data (process), where to store it securely (infrastructure, cybersecurity), how to analyze it (data science), and who will be responsible for all this (change management), to name a few.

Who is this for

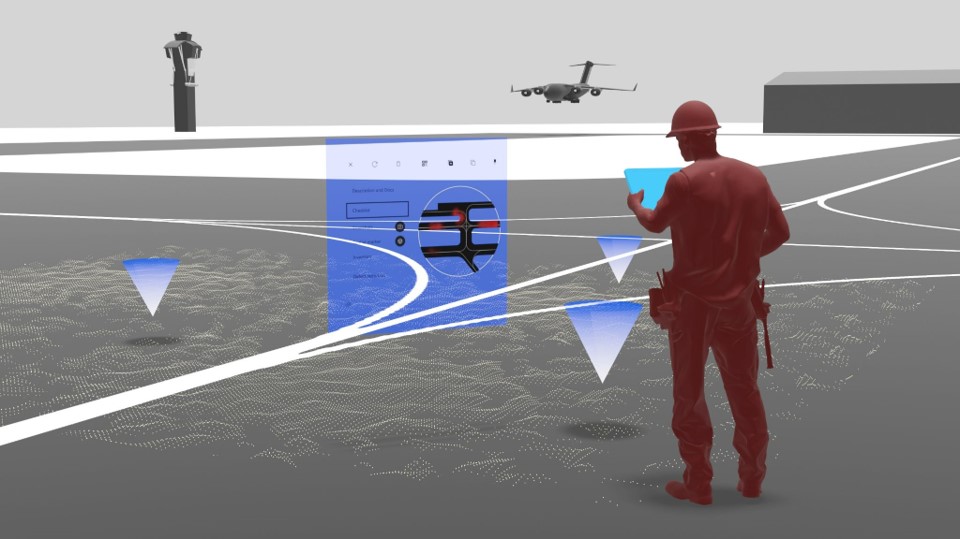

These ideas work best for factories that manufacture large components or assemble large structures (aircraft, vessels, wind turbines), where quality is non-negotiable and qualified personnel spend a lot of time visually inspecting parts. We have written in more detail about how this applies to base maintenance, for example.

This approach is a poor fit if the components you produce fit on a table, or if your production lines are fully automated and the process is rarely interrupted by operators (as in a car factory or a bottling plant).

What can AI do for you?

Machine learning models are good at recognizing patterns. They analyze pictures and videos and tell you what they see, based on how they were trained. There are three broad use cases for this capability in the factory.

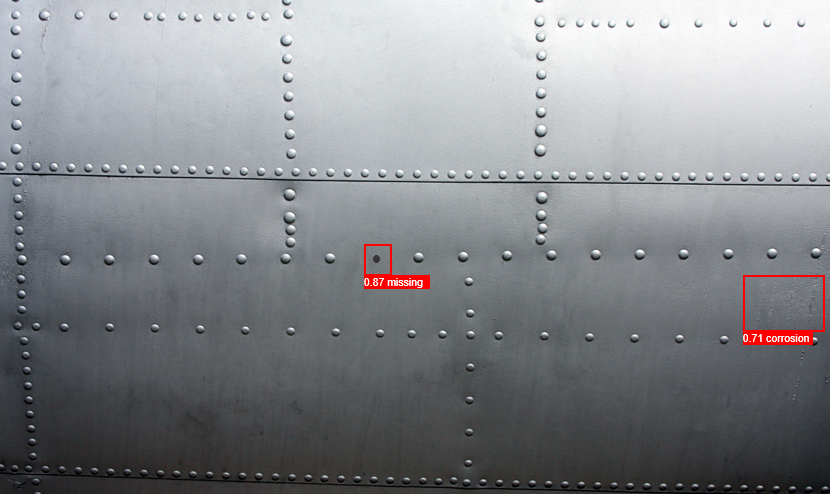

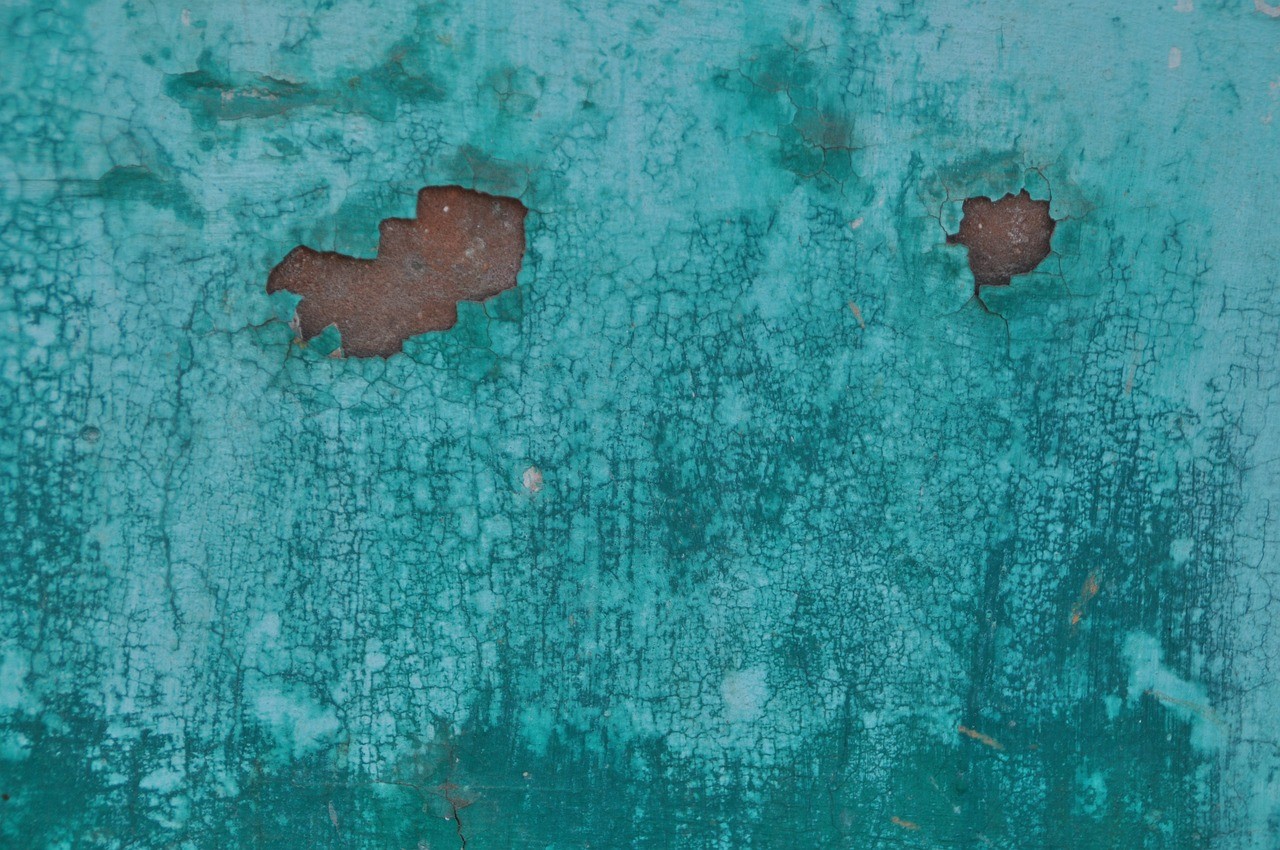

Surface defect detection. Say you are looking for misplaced material, wrinkles, or corrosion. Instead of having a senior engineer inspect every square inch of the part, you could send a junior technician to take several pictures or record a one-minute video of it. By the time the technician is back at their desk, the media will have been processed and only the few areas with suspected defects will be flagged for re-inspection. This saves your most qualified personnel's time and accelerates the inspection workflow.
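The flagging step can be sketched as a simple filter over the model's output: keep only the regions the model is reasonably confident about and hand those to the engineer, most confident first. This is a minimal sketch; the `Detection` record, its fields, and the 0.5 threshold are hypothetical, since the real output format depends on the model and framework you use.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One region flagged by a (hypothetical) defect-detection model."""
    image: str          # source photo or video frame
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels
    label: str          # e.g. "wrinkle", "corrosion"
    confidence: float   # model score in [0, 1]


def flag_for_reinspection(detections, threshold=0.5):
    """Keep detections at or above `threshold`, highest confidence first."""
    flagged = [d for d in detections if d.confidence >= threshold]
    return sorted(flagged, key=lambda d: d.confidence, reverse=True)


# Illustrative model output for two frames of the technician's video
detections = [
    Detection("frame_0012.jpg", (120, 80, 180, 140), "wrinkle", 0.91),
    Detection("frame_0012.jpg", (400, 300, 420, 318), "corrosion", 0.22),
    Detection("frame_0047.jpg", (50, 60, 90, 110), "misplaced material", 0.67),
]
for d in flag_for_reinspection(detections):
    print(d.image, d.label, d.confidence)
```

Raising the threshold sends fewer areas back for re-inspection but risks missing real defects, so in practice it would be tuned on validation data.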

Defect classification. Once a defect is found, it may be tricky to interpret. Which materials and tools should it be treated with? Is it outside the allowable limits, or is it not a defect at all? A model trained on previously classified defects can suggest the answer.

Validation of assembly. Besides surface defects, the ML model can search for missing elements such as holes, nuts, bolts, rivets, and fasteners, or detect them where they are not expected.
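Once detected elements have been located on the part, the check for missing and unexpected fasteners amounts to matching them against the expected positions. Minimal sketch assuming positions are already expressed in millimetres on the part surface (mapping image pixels to part coordinates is not shown) and using a hypothetical 5 mm tolerance; the greedy nearest-neighbour matching is a simplification chosen for clarity, not a prescribed method.

```python
import math


def validate_assembly(expected, detected, tolerance_mm=5.0):
    """Compare expected fastener positions with detected ones.

    expected, detected: lists of (x, y) positions in mm on the part surface.
    Returns (missing, unexpected): expected positions with no detection
    within tolerance, and detections matching no expected position.
    """
    unmatched = list(detected)
    missing = []
    for pos in expected:
        # Greedily pick the closest detection not yet matched to anything.
        best = min(unmatched, key=lambda d: math.dist(pos, d), default=None)
        if best is not None and math.dist(pos, best) <= tolerance_mm:
            unmatched.remove(best)
        else:
            missing.append(pos)
    return missing, unmatched


expected = [(0, 0), (25, 0), (50, 0), (75, 0)]          # e.g. a rivet row from the drawing
detected = [(1, 0.5), (24, -1), (75.5, 0.2), (60, 30)]  # illustrative model output
missing, unexpected = validate_assembly(expected, detected)
print("Missing:", missing)
print("Unexpected:", unexpected)
```

Here the rivet expected at (50, 0) is reported missing and the element found at (60, 30) is reported as unexpected.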

The benefit spanning all three use cases is standardized acceptance criteria. Personnel in different factories, or even on different shifts, can interpret defects differently. One factory could stand out as a quality champion simply because it doesn't record certain types of defects. Another plant may be overprocessing every scratch, wasting time and increasing the scrap rate for no reason.

The good news is that an ML model doesn't vary from site to site or from shift to shift. It applies the same criteria consistently, with one difference: as it is retrained on more images of defects over time, it becomes more accurate and reliable.

What can you do for ML?

Any ML engineer will tell you that data is everything. To unlock these capabilities, you'll need a lot of images of the same kind of defect. Different people quote different thresholds; in our experience, even the simplest demo prototype needs at least 300 pictures or a minute-long video of the affected area. You can get them anywhere: old discrepancy reports, your quality team's mobile phones, or even CCTV cameras. This is where you run into a problem: your sample becomes inconsistent and patchy, and it comes in all sorts of resolutions and dimensions.
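A quick audit of such a collection makes the inconsistency visible before any training starts. Minimal sketch assuming you already know each file's width and height (for example from image metadata); the file names and the 1080-pixel minimum for the shorter side are illustrative assumptions, not a recommended standard.

```python
from collections import Counter


def audit_resolutions(images, min_side=1080):
    """Summarise how heterogeneous an image collection is.

    images: list of (source, width, height) tuples.
    Returns (resolution_counts, too_small), where too_small lists images
    whose shorter side is below `min_side` pixels.
    """
    counts = Counter((w, h) for _, w, h in images)
    too_small = [src for src, w, h in images if min(w, h) < min_side]
    return counts, too_small


images = [
    ("discrepancy_report_2019.jpg", 640, 480),
    ("phone_quality_team.jpg", 4032, 3024),
    ("phone_quality_team_2.jpg", 3024, 4032),   # same phone, portrait orientation
    ("cctv_hangar_3.png", 1280, 720),
]
counts, too_small = audit_resolutions(images)
print(f"{len(counts)} distinct resolutions across {len(images)} images")
print("Below minimum:", too_small)
```

Even four images produce four different resolutions here, and half of them fall below the assumed minimum.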

The transformative role of AR

With the stage set, let's discuss the unique benefits of AR.

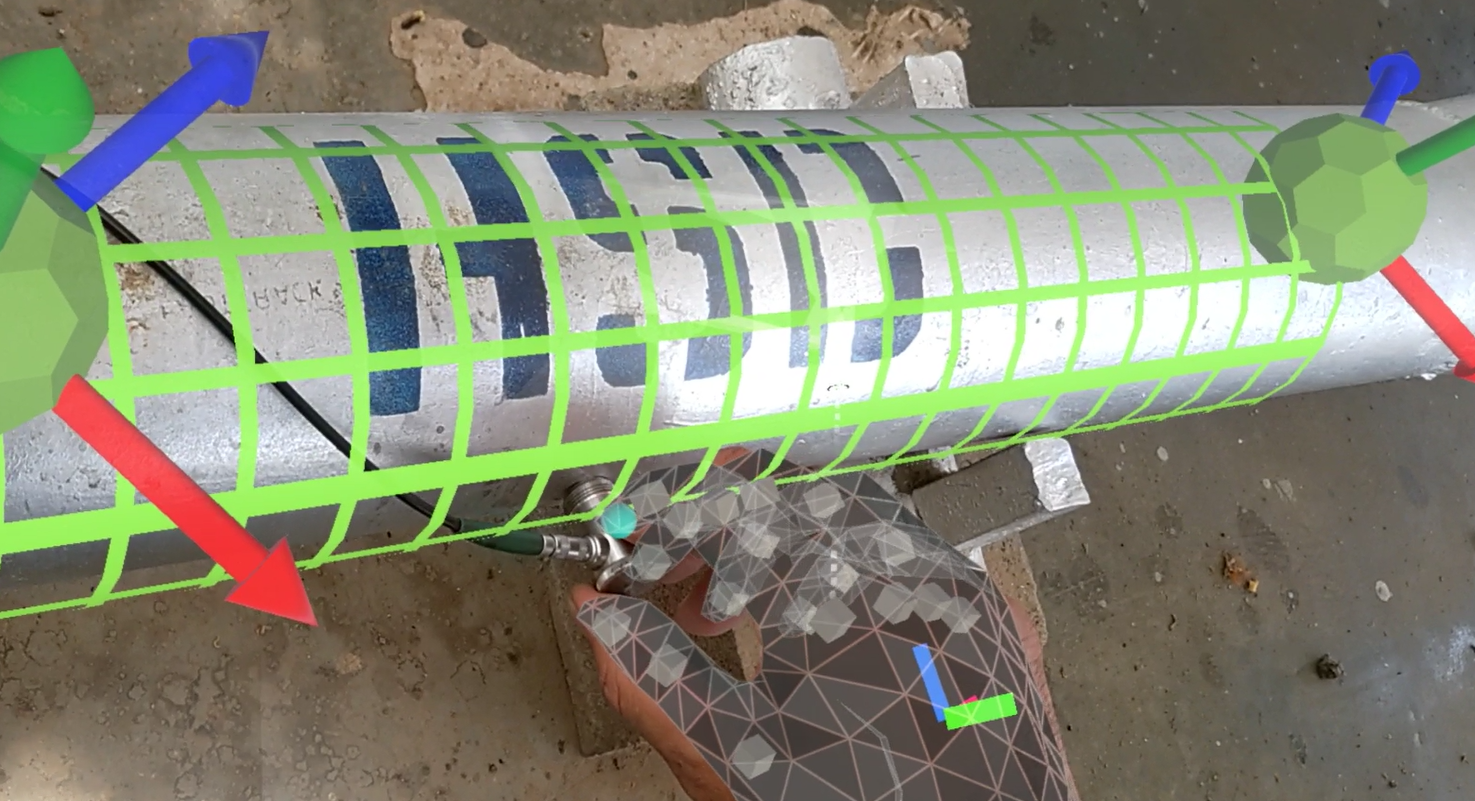

We recommend using the AR headset both for collecting raw data (before training the model) and for validating defects (after training the model). It offers three conveniences: ease of taking pictures, a standardized dataset, and faster labelling.

Convenience #1. AR goggles sit comfortably on your head and are ready whenever you need them. You can take pictures hands-free, pressing only virtual buttons in mid-air. It doesn't matter if your hands are dirty or if you are wearing gloves. The headset removes almost all barriers to capturing images.

Convenience #2. The HoloLens 2 camera captures 8-MP still images and 1080p30 video, which is enough to capture defects as small as 3 mm in diameter from a distance of 2 m (6.5 ft). You can achieve higher accuracy by standing closer and adjusting the lighting or the speed of your movement. The key point is that your dataset will be homogeneous, which will make your data science team happier and your scaling efforts more predictable.
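Whether a 3 mm defect is visible from 2 m can be checked with pinhole-camera arithmetic: the width of the scene the camera sees at distance d is 2 · d · tan(FOV/2), and dividing it by the image width in pixels gives the surface width each pixel covers. The horizontal field of view of 64.69° and the 3904-pixel still width below are assumed values, and the same field of view is assumed for 1080p video; verify both against Microsoft's HoloLens 2 camera documentation before relying on the numbers.

```python
import math


def footprint_mm_per_px(distance_mm, hfov_deg, width_px):
    """Surface width covered by one pixel for a flat target facing the camera."""
    scene_width_mm = 2 * distance_mm * math.tan(math.radians(hfov_deg) / 2)
    return scene_width_mm / width_px


HFOV_DEG = 64.69     # ASSUMED horizontal field of view of the photo/video camera
DISTANCE_MM = 2000   # 2 m, as in the text
DEFECT_MM = 3        # smallest defect of interest

# ASSUMED image widths: 3904 px for 8-MP stills, 1920 px for 1080p video
for name, width_px in [("8-MP still", 3904), ("1080p video", 1920)]:
    mm_per_px = footprint_mm_per_px(DISTANCE_MM, HFOV_DEG, width_px)
    print(f"{name}: {mm_per_px:.2f} mm/px, "
          f"{DEFECT_MM} mm defect spans about {DEFECT_MM / mm_per_px:.1f} px")
```

Under these assumptions each still-image pixel covers roughly 0.65 mm at 2 m, so a 3 mm defect spans about four to five pixels (about two in 1080p video). That is detectable but tight, which is why standing closer helps.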

Convenience #3. Once you've collected the data, you'll need to label it, i.e. tag the defect in each picture so that the ML model learns what a defect looks like. Labelling is a tedious manual exercise that can rarely be avoided. With the headset, technicians can assign the defect type, severity, etc., and mark its location right after taking the picture. Done correctly, this takes a significant burden off the labelling team and speeds up model training.
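A label captured on the headset only needs a few fields to be useful for training, and validating it at capture time keeps unusable labels out of the dataset. The record below is one possible format; the field names, the three-level severity scale, and the JSON export are hypothetical, not a standard annotation schema.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone

# Hypothetical severity scale; use the categories from your own quality manual.
SEVERITIES = ("minor", "major", "critical")


@dataclass
class DefectLabel:
    """One label entered on the headset right after a photo is taken."""
    image_id: str
    defect_type: str     # e.g. "wrinkle", "porosity", "corrosion"
    severity: str        # one of SEVERITIES
    bbox: list           # [x_min, y_min, x_max, y_max] in image pixels
    technician_id: str
    captured_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def validate(self):
        """Reject labels the training pipeline could not use."""
        if self.severity not in SEVERITIES:
            raise ValueError(f"unknown severity: {self.severity!r}")
        x_min, y_min, x_max, y_max = self.bbox
        if not (x_min < x_max and y_min < y_max):
            raise ValueError("bounding box must have positive width and height")

    def to_json(self):
        """Validate, then serialise for upload to the labelling store."""
        self.validate()
        return json.dumps(asdict(self))


label = DefectLabel("IMG_0042", "wrinkle", "minor", [812, 430, 905, 488], "tech-17")
print(label.to_json())
```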

Using the model day-to-day

Several months into the process, you've collected and labelled data, and trained, tested, and validated your first model. Now you'd like to start using it, and this is where we again recommend the AR headset.

If you need to check whether certain welded joints are excessively porous, or whether a wrinkle in the composite surface needs treatment, you no longer have to wait for a manual assessment. Grab the headset, take a picture of the troubled spot, and receive the ML model's verdict almost instantly.

The mobility and flexibility of the AR headset let you get the answer on the spot. We expect that what starts as an imposed standard will become a habit for technicians within 2-3 weeks. Automating quality decisions in routine cases will gradually remove human bias and the effects of inconsistent training from the quality assurance process. As a result, the Cost of Quality will decrease, along with the production Cycle Time.
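Automating only the routine cases can be expressed as a three-way rule on the model's output: act automatically when the model is confident either way, and refer everything else to a person. Minimal sketch assuming the model returns a probability that the photographed spot is defective; the 0.05 and 0.95 thresholds are hypothetical and would have to be set from your own validation data and acceptance criteria.

```python
from collections import Counter


def triage(p_defect, low=0.05, high=0.95):
    """Route one model verdict.

    p_defect: model's estimated probability that the spot is a defect.
    low, high: hypothetical thresholds; only results outside (low, high)
    are handled automatically.
    """
    if not 0.0 <= p_defect <= 1.0:
        raise ValueError("p_defect must be between 0 and 1")
    if p_defect >= high:
        return "defect"    # confident defect: open a discrepancy report
    if p_defect <= low:
        return "accept"    # confident pass: no further action
    return "review"        # uncertain: refer to a qualified inspector


# Illustrative verdicts from one shift (made-up numbers, not real data)
verdicts = [0.01, 0.02, 0.50, 0.97, 0.03, 0.80]
print(Counter(triage(p) for p in verdicts))
```

Only the "review" cases consume inspector time; as the model improves, that share is expected to shrink.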

Devil's advocate

Wait, can't we do the same with a smartphone or tablet? They also have a camera and a screen. Potentially, yes. You may think that if you can give up the hands-free advantage, nothing else justifies more expensive specialized gear such as HoloLens. Hands-free operation is the most obvious advantage of the headset, but it is far from the biggest. Advanced smartphones have spatial sensors, but they are part of a multifunctional device, whereas the headset's sensors and software are designed and optimized for spatial interaction. We wrote earlier about why location-tracking capabilities are at the core of the technology itself, and we encourage you to consider that argument.

For this discussion, we'll simply say that when you are working with large items like aircraft, ships, or residential buildings, placing information (including quality information) in its spatial context helps your QA/QC teams process it faster and make better decisions as a result.
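To see why spatial context matters, consider the same defect recorded in two different sessions. If each record is converted into the part's own coordinate frame, both land at the same location and can be tracked as one finding rather than two. Minimal sketch assuming the part's position and heading in each session are already known (for example from the headset's spatial tracking) and that the part is level, so only a rotation about the vertical axis is handled; a real system would use a full 3D pose.

```python
import math


def to_part_frame(point, part_origin, part_yaw_deg):
    """Convert a point from a headset session's world frame into the part's frame.

    point, part_origin: (x, y, z) in metres in the session frame; z is vertical.
    part_yaw_deg: angle of the part's x-axis from the session's x-axis, about z.
    Simplification: assumes the part is level, so only yaw differs between frames.
    """
    dx, dy, dz = (p - o for p, o in zip(point, part_origin))
    c = math.cos(math.radians(part_yaw_deg))
    s = math.sin(math.radians(part_yaw_deg))
    # Project the offset onto the part's x-axis (c, s) and y-axis (-s, c).
    return (c * dx + s * dy, -s * dx + c * dy, dz)


# The same defect recorded in two sessions whose coordinate systems started
# in different places relative to the part (illustrative values).
monday = to_part_frame((12.0, 1.5, 2.0), part_origin=(0.0, 0.0, 0.0), part_yaw_deg=0.0)
tuesday = to_part_frame((3.5, 9.0, 2.0), part_origin=(5.0, -3.0, 0.0), part_yaw_deg=90.0)
print(monday, tuesday)
```

Both records resolve to roughly (12.0, 1.5, 2.0) in the part's frame, so the QA/QC team sees one defect at one location.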

How the Apple Vision Pro, which entered the market in 2024, will affect this development remains to be seen.
